Developer creates massive isometric pixel-art map of New York City entirely with AI

Andy Koenen, a Google Brain engineer, has unveiled isometric.nyc, a massive isometric map of New York City styled after classic games like SimCity 2000 and built entirely with AI coding agents. Koenen didn't write a single line of code himself; he relied on Claude Code, Gemini CLI, and Cursor, powered by the Opus 4.5 and Gemini 3 Pro models.

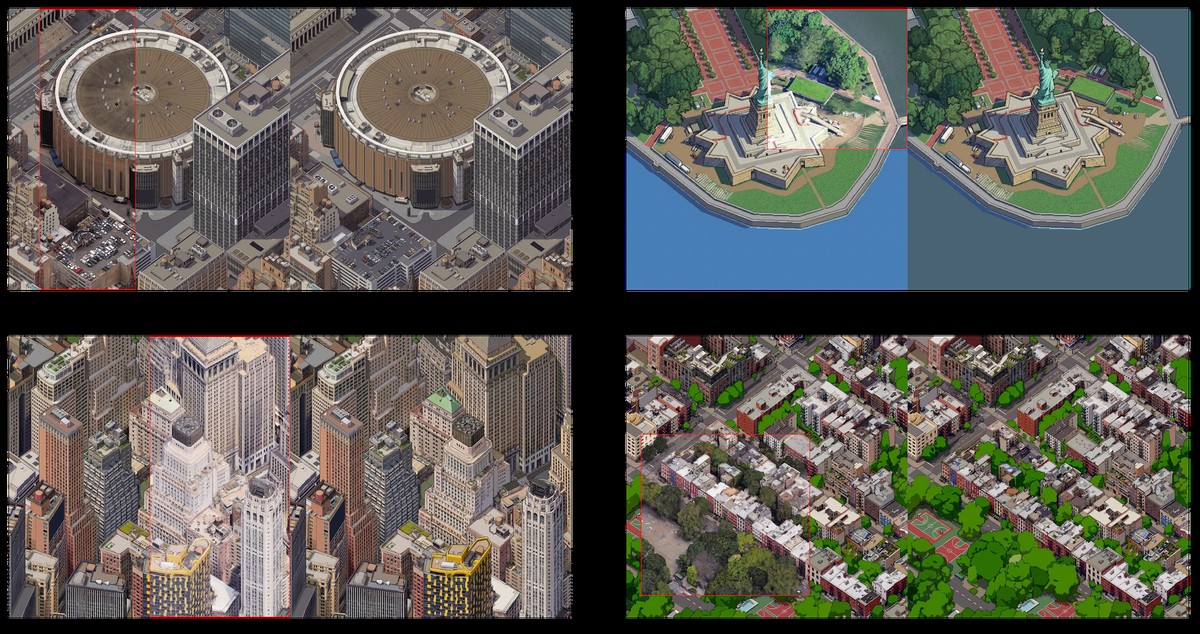

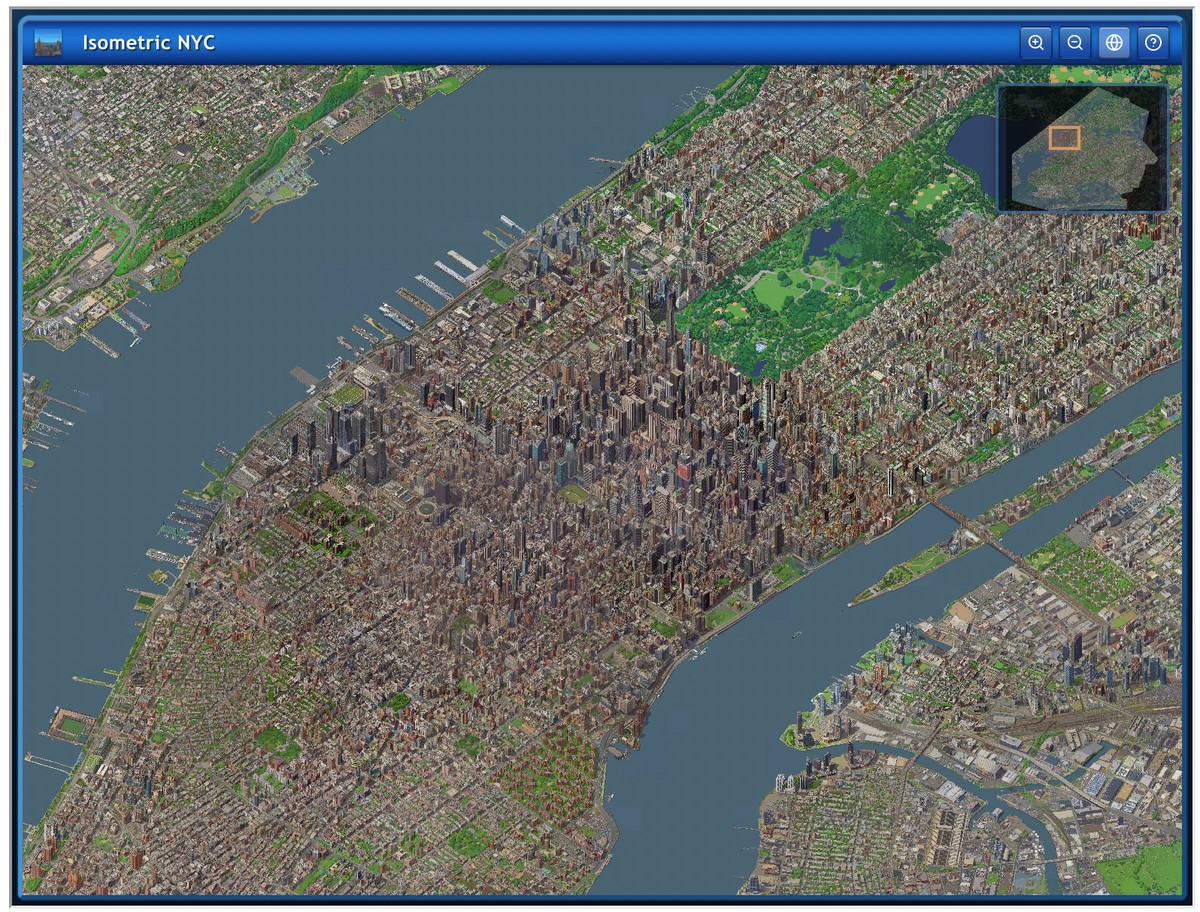

Koenen works on the controllability of generative models at Google Brain. Before switching to software engineering in 2015, he co-founded The M Machine, an electronic music project. The idea for the map came to him while he was looking at Manhattan from the balcony of his Google office and imagining how the city would look in the nostalgic style of late-1990s games. The goal was ambitious: use generative models to transform satellite imagery into pixel-art tiles, roughly 40,000 images in all.

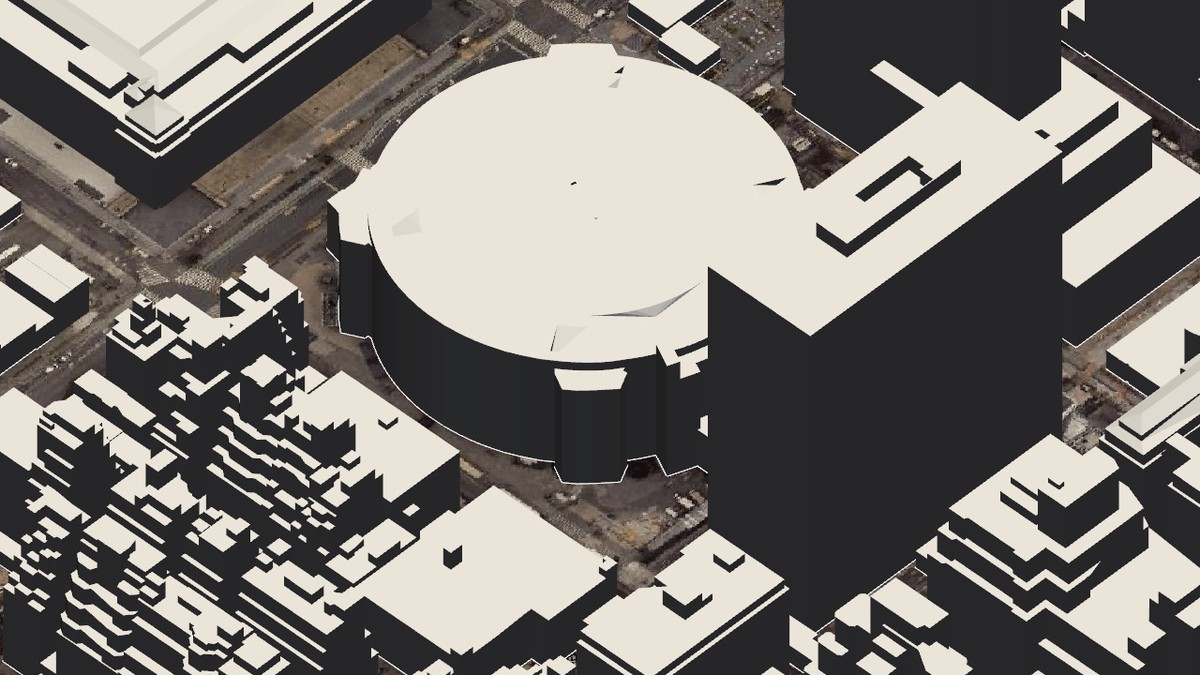

Koenen started with a prompt to Gemini and relied exclusively on AI agents to write all subsequent code. He initially planned to use CityGML data to render individual "white box" tiles, but quickly switched to the Google Maps 3D Tiles API, which supplied accurate geometry and textures that could be drawn with a single renderer. The AI agents handled setting up the coordinate systems and geometry labeling schemas, an area notorious for its complexity in GIS work.
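The article doesn't describe the projection the agents set up. As a rough illustration of what "setting up coordinate systems" involves for a SimCity 2000-style map, the sketch below converts latitude/longitude into local east/north meters around a reference point (an equirectangular approximation that is reasonable over a city-sized area) and then applies the classic 2:1 dimetric projection used in isometric pixel art. The scale constant and both function names are hypothetical; this is not Koenen's code.

```python
import math

# Hypothetical constants for illustration only.
EARTH_RADIUS_M = 6_371_000.0
PIXELS_PER_METER = 0.5  # assumed map scale


def latlon_to_local_m(lat: float, lon: float, ref_lat: float, ref_lon: float) -> tuple[float, float]:
    """Equirectangular approximation: (east, north) offset in meters from a reference point.

    Good enough over a city-sized area; not a substitute for a proper GIS projection.
    """
    east = math.radians(lon - ref_lon) * EARTH_RADIUS_M * math.cos(math.radians(ref_lat))
    north = math.radians(lat - ref_lat) * EARTH_RADIUS_M
    return east, north


def iso_project(east: float, north: float, height: float = 0.0) -> tuple[float, float]:
    """2:1 dimetric projection to screen pixels (x to the right, y downward).

    One step east moves right and down, one step north moves right and up, and
    vertical steps are half the size of horizontal ones. Height lifts a point up.
    """
    x = (east + north) * PIXELS_PER_METER
    y = (east - north) * PIXELS_PER_METER / 2 - height * PIXELS_PER_METER
    return x, y
```

The 2:1 ratio is what gives classic isometric games their characteristic diagonal lines, which step exactly one pixel vertically for every two horizontally.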

For image generation, Koenen first used Nano Banana Pro but ran into problems with consistency, speed, and cost. The model produced the desired style only about half the time, which is not reliable enough for large-scale production.
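The 50% figure matters at this scale. If each attempt independently produces the right style with probability p, the number of attempts per tile follows a geometric distribution with mean 1/p. A minimal sketch, assuming independent attempts and the article's figure of roughly 40,000 tiles (the function name is hypothetical):

```python
def expected_generations(num_tiles: int, success_rate: float) -> float:
    """Expected total model calls if each tile is retried until one attempt succeeds.

    Assumes attempts are independent with a fixed success probability, so the
    number of attempts per tile is geometric with mean 1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return num_tiles / success_rate


print(expected_generations(40_000, 0.5))  # 80000.0
```

At p = 0.5 the number of generations doubles, and someone still has to sort the usable outputs from the rest.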

The solution was to fine-tune the Qwen-Image-Edit model on the oxen.ai platform, a process that took 4 hours and cost just $12. Koenen built the training dataset from roughly 40 pairs of input and output images and used an "infill" strategy so that adjacent tiles could be generated seamlessly.
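The article doesn't spell out how the infill inputs were built. One common way to frame infilling, sketched here purely as an assumption, is to give the model a canvas in which already-generated neighboring tiles appear as context and the target tile is left blank, so the model only has to paint the missing region consistently with its surroundings. Tiles are tiny grayscale 2D lists here, and `build_infill_input` and the 3x3 neighborhood layout are hypothetical.

```python
Tile = list[list[int]]  # tiny grayscale tile; real tiles would be RGB images


def build_infill_input(
    generated: dict[tuple[int, int], Tile], row: int, col: int, size: int
) -> tuple[Tile, Tile]:
    """Compose a 3x3-tile canvas centered on (row, col), plus a mask.

    Pixels from already-generated neighbors are copied in as context (mask 0).
    Everything else, including the target tile itself, stays blank (value 0)
    and is marked with mask 1 as the region the model should paint.
    """
    canvas = [[0] * (3 * size) for _ in range(3 * size)]
    mask = [[1] * (3 * size) for _ in range(3 * size)]
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            neighbor = generated.get((row + dr, col + dc))
            if neighbor is None or (dr, dc) == (0, 0):
                continue  # unknown neighbor or the target tile: leave it masked
            top, left = (dr + 1) * size, (dc + 1) * size
            for y in range(size):
                for x in range(size):
                    canvas[top + y][left + x] = neighbor[y][x]
                    mask[top + y][left + x] = 0
    return canvas, mask
```

In this framing, a caller would keep only the center tile of the model's output and store it in `generated`, so each new tile becomes context for the next one.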

Water and trees caused the biggest problems: the models couldn't reliably generate these elements because they struggled to separate structure from texture. Koenen built numerous micro-tools to tackle the problem:

- an automatic water corrector based on color selection
- custom prompts with negative examples
- export to and import from Affinity for manual edits
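The article gives no details of the water corrector beyond "color selection". A minimal sketch of that idea, assuming RGB pixels stored as tuples and a hypothetical palette: any pixel within a color-distance tolerance of a reference water color is snapped to a single canonical water color, which evens out blotchy or off-hue water.

```python
Pixel = tuple[int, int, int]

# Hypothetical palette values; the project's actual colors are not documented.
WATER_REFERENCE: Pixel = (64, 112, 160)
WATER_CANONICAL: Pixel = (56, 104, 168)


def color_distance_sq(a: Pixel, b: Pixel) -> int:
    """Squared Euclidean distance in RGB space (cheap, no perceptual weighting)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def correct_water(image: list[list[Pixel]], tolerance: int = 40) -> list[list[Pixel]]:
    """Return a copy in which near-water pixels are replaced by the canonical water color."""
    limit = tolerance * tolerance
    return [
        [WATER_CANONICAL if color_distance_sq(p, WATER_REFERENCE) <= limit else p for p in row]
        for row in image
    ]
```

A global color key like this would also recolor anything else that happens to be water-blue, so in practice it would likely need a region mask or manual touch-ups.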

Ultimately, significant time went into manually correcting edge cases.

To scale up, Koenen moved the models to rented H100 GPUs from Lambda AI, where an AI agent configured the inference server in minutes. The system generated over 200 tiles per hour for less than $3 per hour, which made the entire project economically viable. Koenen ran generation overnight, checked the results in the morning, and planned the next sections of the map.
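The article's figures allow a back-of-the-envelope check. Assuming one generated image per tile, roughly 40,000 tiles, 200 tiles per hour, and $3 per hour, and ignoring retries and manual fixes, one pass over the whole map works out as follows. Because the article gives "over 200" tiles and "less than $3" as bounds, treat the results as rough upper bounds rather than reported totals; the function name is hypothetical.

```python
def generation_budget(num_tiles: int, tiles_per_hour: float, dollars_per_hour: float) -> tuple[float, float]:
    """Return (hours, dollars) for one pass over all tiles, ignoring retries."""
    hours = num_tiles / tiles_per_hour
    return hours, hours * dollars_per_hour


hours, cost = generation_budget(40_000, 200, 3.0)
print(f"{hours:.0f} hours, about ${cost:.0f}")  # 200 hours, about $600
```

That is a bit over eight days of round-the-clock generation for under $600 in compute, which fits running batches overnight.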

The project also exposed a key limitation of current AI: image models lag significantly behind text and code models in their ability to evaluate their own output and correct errors.

Unlike coding agents, which can run code, read the errors, and fix them, image generation models cannot reliably detect seams or incorrect textures in their own outputs. As a result, quality control could not be automated.
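For contrast, a purely mechanical seam check is easy to script; the hard part is judging whether an output looks right. The naive heuristic below is an illustration, not something the project is reported to have used. It compares the pixel columns on either side of the boundary between two horizontally adjacent grayscale tiles and flags a large mean difference. It would catch hard color discontinuities but says nothing about whether a texture is correct. The threshold and function names are hypothetical.

```python
Tile = list[list[int]]  # grayscale rows; hypothetical representation


def seam_score(left: Tile, right: Tile) -> float:
    """Mean absolute difference between the last column of `left` and the first column of `right`."""
    if len(left) != len(right):
        raise ValueError("tiles must have the same height")
    diffs = [abs(l_row[-1] - r_row[0]) for l_row, r_row in zip(left, right)]
    return sum(diffs) / len(diffs)


def has_visible_seam(left: Tile, right: Tile, threshold: float = 20.0) -> bool:
    """Flag a seam when the boundary difference exceeds the (hypothetical) threshold."""
    return seam_score(left, right) > threshold
```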

The complete map is available at isometric.nyc and uses the OpenSeadragon library to display the gigapixel image at multiple zoom levels.
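OpenSeadragon keeps gigapixel images responsive by loading only the tiles of an image pyramid that are visible at the current zoom level. The article doesn't say which tile format isometric.nyc uses. Assuming the Deep Zoom (DZI) layout that OpenSeadragon supports, where level 0 is a 1x1 image and each level doubles the resolution up to full size, the pyramid depth and per-level tile counts can be sketched as follows. Tile overlap is ignored, and the default 254-pixel tile size is an assumption.

```python
def dzi_levels(width: int, height: int, tile_size: int = 254) -> list[tuple[int, int, int, int]]:
    """Return (level, level_width, level_height, tile_count) for every Deep Zoom level.

    Level 0 is 1x1 and the top level is full resolution. Tile overlap is ignored.
    """
    max_level = (max(width, height) - 1).bit_length()  # ceil(log2(max dimension))
    levels = []
    for level in range(max_level + 1):
        factor = 2 ** (max_level - level)
        w = -(-width // factor)  # ceiling division
        h = -(-height // factor)
        cols = -(-w // tile_size)
        rows = -(-h // tile_size)
        levels.append((level, w, h, cols * rows))
    return levels
```

For a placeholder 100,000 x 80,000 image (not the real map's size), this gives 18 levels, and the viewer only ever fetches the handful of tiles that cover the screen at the current level.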

Koenen, who has worked on gigapixel image viewing systems at Google Brain, noted that without generative models an art project of this scale would be practically impossible: hiring enough artists to hand-draw every building in New York City is unrealistic.